US elections are proverbially esoteric and complicated, with a big chunk of this complexity coming from the existence of the Electoral College.

We are all familiar with the existence of “swing states”: those in the middle of the political spectrum, which are likely to determine the result. But what if we came to an election year with a state that was so large (in Electoral College votes), and so “swingy” (in terms of being in the middle of the political spectrum), that winning there seemed to be the single determinant of the election?

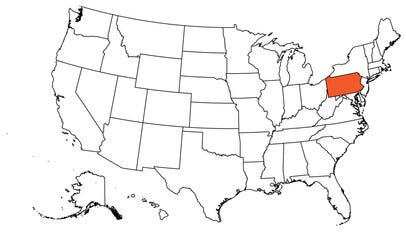

In 2024, it looks as though we might be in a situation quite close to this, with the state of Pennsylvania.

There is an opinion out there that in order to understand who will win the Presidency in November, we can skip all the exciting complexity and divergence in opinions between the poll aggregators and modellers — all we really need to do is to determine who will win Pennsylvania.

But is this right?

If in doubt, define your terms

There is a mathematical way of making this vague idea precise, known as “information gain” (or, if you want to sound smart: as “Kullback-Leibler divergence”).

Roughly, this measure looks at some outcome (e.g., the US presidential election) and asks how much, in probabilistic terms, we already know about it (for a binary outcome, 50:50 means we don’t know much; 99:1 means we know a lot). Then it asks how much we would know about that same outcome if we also knew some other outcome (here, the result in a particular state).1

Let’s use some made-up numbers, to illustrate what’s going on.

It’s time for some information theory

The crucial quantity here is “information entropy”. If the overall race is pretty much a toss-up (it is), then we can say that each of the binary outcomes (Harris win, Trump win) has a probability of about 50%. Our entropy is then found by multiplying the probability of each outcome by the log-base-2 of that probability, adding these up, and flipping the sign (that is, its Shannon entropy). If we do this for a simple 50:50 outcome, we get the answer of 1 bit. That is, we currently have, effectively, no information whatsoever about a binary outcome. Telling us the result “Trump wins” or “Harris wins” would gain us precisely one bit of information: we had no clue before, and now we know.
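For readers who like to see it written down, here is the standard Shannon entropy formula, worked through for the toss-up case. The symbols $H$ (entropy) and $p_i$ (the probability of outcome $i$) are notation assumed here for illustration.

$$
H = -\sum_i p_i \log_2 p_i
$$

$$
H_{50:50} = -\left(0.5 \log_2 0.5 + 0.5 \log_2 0.5\right) = -\left(0.5 \cdot (-1) + 0.5 \cdot (-1)\right) = 1 \text{ bit}
$$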

Note that this entropy gain in the toss-up case is much larger than if the race was a near certainty for one side or another. If one candidate had - say - 95% probability of winning, then the entropy would be 0.29. Or - in other words - it’s likely that knowing the result would just confirm the 95% probability, and there’s only a 5% chance we’d get some really surprising news. On average, given our expectations, this gains us much less information: 0.29 of a bit.
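If you would rather check these two numbers than take them on trust, a few lines of Python will do it. This is a minimal sketch: the function name `binary_entropy` is made up for this illustration and does not come from any polling model.

```python
import math


def binary_entropy(p: float) -> float:
    """Shannon entropy, in bits, of a two-way outcome with probabilities p and 1 - p."""
    if p in (0.0, 1.0):
        return 0.0  # a certain outcome carries no surprise at all
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))


toss_up = binary_entropy(0.50)         # the 50:50 race
near_certainty = binary_entropy(0.95)  # one candidate at 95%

print(f"toss-up: {toss_up:.2f} bits, near certainty: {near_certainty:.2f} bits")
# prints "toss-up: 1.00 bits, near certainty: 0.29 bits"
```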

This is in accord with intuition: learning the result of a race we were pretty sure of is likely to give us less information than learning the result of one that was pretty even.

Now, let’s apply this thinking to a case where the result in some US state also gives us a lot of information about the overall national result. Let’s assume there’s a big important swing state where there’s a 50% chance that Harris wins it, but if she wins it, her chance of winning the overall Presidency jumps to 90%. And - symmetrically - there’s a 50% chance that Trump wins it, but if he does, then - likewise - his overall win probability jumps to 90%. The entropies of these two “sub-outcomes” are much smaller than the overall: they’re about 0.47 each. This gives us a mathematically precise measure of how much “knowing” the outcome of this state, changes the amount of information we have about the overall Presidency.

Now, in order to compare the Shannon entropies of these two sub-outcomes to the overall figure, we calculate a weighted average of the two (they’re both 50% likely, so the average is just 0.47 again). This tells us how much uncertainty about the national outcome is left once we know the state result. It’s a dramatic change: the entropy has come down from 1 bit (before we knew the state result) to 0.47 bits (after we found out, one way or the other), a gain of 0.53 bits.
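The same made-up example can be run as code. This is a sketch under the assumptions stated in the text (a 50% chance of winning the state, and a 90% or 10% chance of winning nationally depending on the state result); the function names and parameter names are invented for this illustration.

```python
import math


def binary_entropy(p: float) -> float:
    """Shannon entropy, in bits, of a two-way outcome with probabilities p and 1 - p."""
    if p in (0.0, 1.0):
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))


def information_gain(p_state: float, p_win_if_won: float, p_win_if_lost: float) -> float:
    """Expected drop in entropy about the national result from learning one state's result.

    p_state       -- probability the candidate wins the state
    p_win_if_won  -- probability she wins nationally, given she wins the state
    p_win_if_lost -- probability she wins nationally, given she loses the state
    """
    # Overall win probability, by the law of total probability.
    p_win = p_state * p_win_if_won + (1 - p_state) * p_win_if_lost
    before = binary_entropy(p_win)
    # Weighted average of the entropies of the two "sub-outcomes".
    after = (p_state * binary_entropy(p_win_if_won)
             + (1 - p_state) * binary_entropy(p_win_if_lost))
    return before - after


gain = information_gain(p_state=0.5, p_win_if_won=0.9, p_win_if_lost=0.1)
print(f"information gain: {gain:.2f} bits")  # prints "information gain: 0.53 bits"
```

Deriving the overall win probability inside the function, rather than passing it in separately, keeps the three inputs from contradicting one another.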

This “information gain” number is exactly what we are after: a measure of how much information the result for each state gives you about the national result, and we can easily calculate it for every state’s results. Using Nate Silver’s model, which presents the information in a helpful manner from his simulations, we can make the information gain precise. Overall, Silver has the race as a near toss-up (so the entropy is pretty near one: 0.997 to be precise), but knowing the results in each state can shift these probabilities a good way.
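To show what "calculate it for every state" might look like in practice, here is a hedged sketch that ranks states by information gain. The three states and all of their probabilities are placeholders invented for this example; they are not Silver's figures, and the input layout is an assumption rather than the format his model actually publishes.

```python
import math


def binary_entropy(p: float) -> float:
    """Shannon entropy, in bits, of a two-way outcome with probabilities p and 1 - p."""
    if p in (0.0, 1.0):
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))


def information_gain(p_state: float, p_win_if_won: float, p_win_if_lost: float) -> float:
    """Expected drop in entropy about the national result from learning one state's result."""
    p_win = p_state * p_win_if_won + (1 - p_state) * p_win_if_lost
    after = (p_state * binary_entropy(p_win_if_won)
             + (1 - p_state) * binary_entropy(p_win_if_lost))
    return binary_entropy(p_win) - after


# HYPOTHETICAL inputs, chosen so the national race is 50:50 in every row:
# (P(win state), P(win nationally | win state), P(win nationally | lose state))
made_up_states = {
    "State A": (0.5, 0.90, 0.10),  # big and swingy
    "State B": (0.5, 0.70, 0.30),  # swingy but less decisive
    "State C": (0.9, 0.55, 0.05),  # fairly safe, so its result is rarely news
}

gains = {name: information_gain(*probs) for name, probs in made_up_states.items()}
ranking = sorted(gains, key=gains.get, reverse=True)

for name in ranking:
    print(f"{name}: {gains[name]:.3f} bits")
```

With these placeholder inputs the ranking comes out as State A, then State B, then State C: the state that is both uncertain and strongly tied to the national result tells you the most.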

Here are the results:

And this is in keeping, broadly, with conventional wisdom about where the “swing” states are. The ordered chart answers the question: if you awoke from a coma after the election and weren’t allowed to know the overall result, but could ask about the result in just one state, which should you ask about to get the most information on the overall result? The answer is, by a mile, Pennsylvania. It gives you an information gain of nearly 2/3 relative to your original state of knowledge.

Note, of course, that this measure is model-dependent: other models will give slightly different answers to Silver’s. But the big picture, with the result in Pennsylvania head and shoulders above the also-rans, will be pretty consistent.

So why is this a problem for poll aggregators?

In a close race, as we have at the moment, a reasonably specified model will be very sensitive to what is known about each candidate’s chances in Pennsylvania. So right now the overall chances are very sensitive to the results of just one or two polls in just one state. This feature was enough to make Silver’s model “jump” at the end of August (the 29th) from signalling that Harris’ chances were better to showing that Trump’s were better, simply because of a few poor-quality polls (and one good-quality poll) in one state. This, in turn, led to a defensive-sounding note being posted on Silver’s main page, trying to explain why it had happened. Then more polling was released last night that was more positive for Harris, and once that and new polls for Pennsylvania come through, the lines will likely jump again. Aggregators hate that kind of twitchiness in response to the smallest scrap of news; it’s precisely what they are trying to avoid.

Their problem is that the whole point of aggregating large amounts of polling and other data is to combine a large number of signals, no one of which is definitive, and thereby reduce the uncertainty associated with a read from “just one poll”. We are meant to be able to aggregate our way away from this kind of sensitivity. And yet here we are, with the mere release date of polling from just one firm in just one state shifting the needle back and forth.

In the meantime, those of us who are not sure whether to trust election models, and who are fatigued by the complexity and the whole idea of multiple “paths to victory”, can allow ourselves a small smile. National polls, most state polls, approval ratings … it’s all noise. If you want to know who’s going to win, ignore everything but the race in Pennsylvania, and you’ll get very nearly as good information as anyone else.2

Logarithms are involved. Don’t panic.

Of course this is no fun. What you actually want is a side-by-side comparison of the results from all of these aggregators and modellers, a comparison to the betting market odds, and reviews of the methodology of each, so you can understand what they agree on, and how, where and why they differ. And you’re in luck.