So many post-mortems of the election results are already out there. Just a very brief thought on a reaction that’s coming from many quarters: that the “polls were all right after all”.

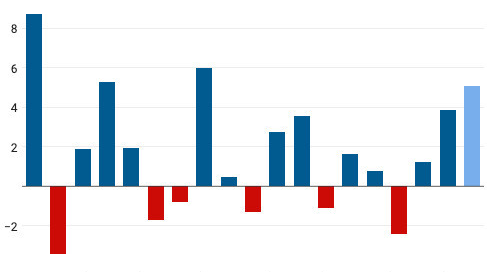

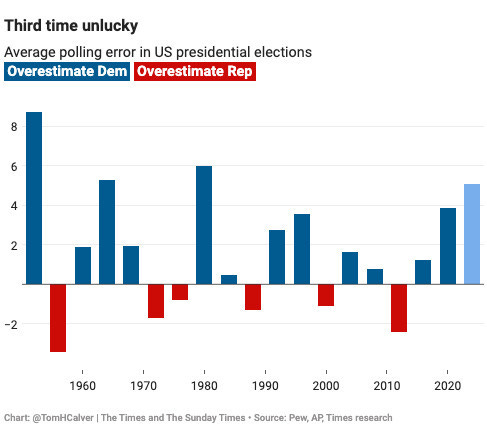

They weren’t. It’s true that their errors were pretty much in line with historical levels[1] … but that is to say: they were really awful. Here’s the top-line/popular vote miss.

And then you drill down to the numbers that actually decide the result: the individual state-level vote shares. The errors here were also large and, worse, highly correlated in one direction.

To illustrate this, let’s see how the final polling margin averages[2] compared to the results (so far) in the individual states. Here’s a visual look at them, showing the Trump minus Harris margin in each case (polls = start of each arrow, result = end of each arrow). Red where the polls underestimated Trump, blue where they underestimated Harris.

The trouble with this is that the errors don’t look that big compared with the differences between the individual states. So let’s use an objective measure.

Error metrics are a big topic in statistics and its various aliases (machine learning, deep learning, AI), but let’s go for the one this Substack is named after: the “Mean Squared Error”. That is, the differences between the estimate and the result, squared and then averaged. We often take the square root of this number (the “root mean squared error”, or RMSE), so that the units are the same as those of the thing being measured: in this case, percentage points.
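As a minimal sketch of that calculation (illustrative Python, not the code behind the figures in this post; the three state margins are made up):

```python
import math


def rmse(estimates, results):
    """Root mean squared error: square each estimate-minus-result gap,
    average the squares, then take the square root."""
    if len(estimates) != len(results) or not estimates:
        raise ValueError("need two equal-length, non-empty sequences")
    squared_gaps = [(e - r) ** 2 for e, r in zip(estimates, results)]
    return math.sqrt(sum(squared_gaps) / len(squared_gaps))


# Hypothetical margins (Trump minus Harris, in points) for three made-up states.
polls = [1.0, -2.0, 0.5]
results = [3.0, 1.0, 2.5]
print(round(rmse(polls, results), 2))  # prints 2.38
```

Replacing the squared gap with its absolute value would give the mean absolute error instead; squaring makes a few large misses count for more than many small ones.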

So, the root mean squared error across the 56 state-level results[3] here is 3.7ppts, which is a reasonable figure to quote as the “average” state-level polling miss.[4]

In terms of being any decent guide to the overall result, you have to say that this is at least unsatisfactory. The seven “swing” states - the ones that were always going to decide the election - were all polling within one or two percentage points of going one way or the other.

So a miss with a root MSE of 3.7ppts, very highly correlated in one direction, simply means that all the swing states would go the same way. Which they did - to Trump.
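To see why the correlation matters as much as the size of the miss, here is a rough Monte Carlo sketch. It assumes seven hypothetical swing states polling within two points of a tie, and a per-state error of about 3.7 points that is either mostly shared across states (a national shift plus a small local wobble) or drawn independently for each state. The margins, the 3.5/1.2 split between shared and local error, the seed and the trial count are all illustrative assumptions, not estimates from the 2024 data.

```python
import random

# Hypothetical poll margins (Trump minus Harris, points) for seven swing
# states, all within two points of a tie. Illustrative only.
POLL_MARGINS = [-1.5, -0.8, -0.3, 0.2, 0.6, 1.1, 1.8]
TOTAL_SD = 3.7   # assumed per-state error size, in line with the RMSE above
SHARED_SD = 3.5  # assumed national (shared) part of the error
STATE_SD = (TOTAL_SD ** 2 - SHARED_SD ** 2) ** 0.5  # ~1.2, state-specific part


def sweep_rate(correlated: bool, trials: int = 20_000, seed: int = 2024) -> float:
    """Fraction of simulated elections in which every state lands on the
    same side of zero, i.e. a clean sweep for one candidate."""
    rng = random.Random(seed)
    sweeps = 0
    for _ in range(trials):
        if correlated:
            # One shared shift hits every state, plus a small local wobble.
            shift = rng.gauss(0.0, SHARED_SD)
            outcomes = [m + shift + rng.gauss(0.0, STATE_SD) for m in POLL_MARGINS]
        else:
            # Same total error size per state, but drawn independently.
            outcomes = [m + rng.gauss(0.0, TOTAL_SD) for m in POLL_MARGINS]
        if all(o > 0 for o in outcomes) or all(o < 0 for o in outcomes):
            sweeps += 1
    return sweeps / trials


if __name__ == "__main__":
    print(f"correlated errors:  {sweep_rate(True):.1%} sweeps")
    print(f"independent errors: {sweep_rate(False):.1%} sweeps")
```

Both versions give each state the same overall error variance; only the sharing differs. Under these made-up inputs the correlated version produces a clean sweep far more often, and the exact percentages depend entirely on the assumptions.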

No, I mean BAD

But - the polling defenders might say - this is simply an unrealistic standard. It’s not the error itself that’s the issue here, it’s how close the race was. You just can’t expect any methodology to do much better than 3.7ppts RMSE, and we’re just unlucky this cycle that the race was so close that this kind of miss was critical.

OK. Let’s take that Mean Squared Error measure. Instead of comparing the final 2024 polls to what they were supposed to be a guide to (the 2024 result), what happens if you compare them to the 2020 result?

Now, let’s be clear - none of the polls were meant to be measuring the 2020 results, or even to be a good guide to them. If anything, they are meant to tell us how much things have changed since 2020. The 2020 result just gives a convenient benchmark for how well the polls are capturing this election rather than some other one.

Aaaand, the root mean squared error of the state-level 2024 final polls in predicting the 2020 results is better. Much better: 2.4ppts.
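One way to see how this can happen is pure bookkeeping. Write $p_i$ for the final polling margin in state $i$ (Trump minus Harris), and $r_i^{2024}$ and $r_i^{2020}$ for the actual margins in each year. The gap between the polls and the 2020 result splits exactly into the 2024 polling error plus the swing since 2020:

$$
p_i - r_i^{2020} = \underbrace{\left(p_i - r_i^{2024}\right)}_{\text{2024 polling error}} + \underbrace{\left(r_i^{2024} - r_i^{2020}\right)}_{\text{swing since 2020}}
$$

When the polls underestimate Trump (the first term is negative) in roughly the same places and by roughly the same amount as the swing towards him (the second term is positive), the two terms largely cancel, and the polls sit closer to the 2020 result than to the 2024 one. The identity only shows that the cancellation is possible; it doesn't say why it happened.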

This is a little absurd, so let me emphasise the point: the final polls for 2024 - as aggregated by competent modellers - were a better guide to the 2020 Presidential results than they were to the 2024 results.

That’s not fine.

Rather, it hints that all the weighting, sampling and other odd-looking operations pollsters have to get up to in order to produce a balanced sample … appear to work better at reproducing the last result than at giving an accurate read on the current state of play.

People often criticise political campaigns for “fighting the last war”. In this case, there is a clear explanation: the polling that is supposed to give them a view of this year’s war is better - literally - at showing them the one from four years ago.

[1] This is what’s led me to warn several times against confusing an uncertain race with a close race.

[2] As compiled by FiveThirtyEight in this case, but other aggregators will give similar results.

[3] The 50 states, plus DC and the separately allocated congressional-district votes in Maine and Nebraska.

[4] An alternative would be the mean absolute error, but I named the Substack after MSE, so I’ll stick with it.

It's super interesting that accuracy isn't getting better over time. Quite counterintuitive. Do you think it ties in with the issues the ONS is having getting people to respond? Volume-wise, the political polls reach lots of people, but clearly not the right mix.

Nice to think that the polls will become less prominent in the news cycle given the inaccuracies. Probably wishful thinking.

Not sure I buy this.

For one thing, the RMS error in the battleground states is 2.8. That drops to 2.3 if you put aside Nevada, which everyone knew was going to be hard to poll, or 2.5 if you leave Nevada in but weight by Electoral College vote. The polls will probably have done a less good job of getting the popular vote, since the swing towards Trump seems to have been higher outside the battleground states, but that wasn't really what they were trying for.
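The electoral-vote weighting mentioned here weights each state’s squared miss by its Electoral College votes before averaging. A minimal sketch follows; the electoral vote counts are the 2024 allocations, but the polling errors are made-up placeholders, not the actual 2024 figures, and `weighted_rmse` is a hypothetical helper name.

```python
import math


def weighted_rmse(errors, weights):
    """Square each error, take the weighted average of the squares, then
    the square root. Equal weights give the ordinary RMSE."""
    total = sum(weights)
    return math.sqrt(sum(w * e ** 2 for e, w in zip(errors, weights)) / total)


# Electoral College votes per swing state (2024 allocation).
EV = {"AZ": 11, "GA": 16, "MI": 15, "NV": 6, "NC": 16, "PA": 19, "WI": 10}
# Hypothetical poll-minus-result errors in points: placeholders, not real data.
ERR = {"AZ": -3.0, "GA": -1.5, "MI": -2.0, "NV": -5.0, "NC": -1.0, "PA": -2.5, "WI": -1.0}

states = list(EV)
unweighted = weighted_rmse([ERR[s] for s in states], [1] * len(states))
ev_weighted = weighted_rmse([ERR[s] for s in states], [EV[s] for s in states])

# Dropping one state (here Nevada) is just a filter before averaging.
no_nevada = [s for s in states if s != "NV"]
unweighted_ex_nv = weighted_rmse([ERR[s] for s in no_nevada], [1] * len(no_nevada))

print(f"unweighted {unweighted:.2f}, EV-weighted {ev_weighted:.2f}, ex-NV {unweighted_ex_nv:.2f}")
```

With these placeholder errors, giving the state with the largest miss a small electoral-vote weight, or dropping it entirely, pulls the RMS error down.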

For another, the swing towards Trump was fairly uniform across the swing states (EV-weighted average 3.4, EV-weighted RMS difference from average 1.6). So, sure, if there's a reasonably uniform swing towards Trump and a polling error that underrates Trump, the polls will predict 2020 better than 2024, but I don't see that says anything much about their accuracy.

I do agree that three consecutive errors in presidential years in the same direction is indicative of something.